Steve Furber at VCF 2013

This is a transcript of the talk given by Steve Furber at the Vintage Computer Festival (part of Silicon Dreams) held at Snibston Discovery Museum in Coalville, Leicestershire, UK on 06/07/2013.

Steve Furber worked at Acorn Computers from its earliest days until 1990, where he designed the BBC Micro and co-designed the ARM microprocessor. In 1990 he joined the University of Manchester as the ICL Professor of Computer Engineering, where his research has included asynchronous (clock-less) processors and, most recently, SpiNNaker, a massively parallel system for exploring the uses and requirements of computing modelled more closely on biology.

All text is Steve Furber's, apart from questions and answers from the audience, marked Q: and A:, and some small clarifications marked (Ed.)

I'm Steve Furber and I'm going to talk about the BBC Micro and its heritage, so there's going to be some ancient history and some more modern stuff in this.

You are the heroes of the afternoon for coming inside on what might be the nicest day of the year, and you're also missing the Wimbledon men's final, so thank you for being here.

What I'm planning to talk about is the set of issues listed in this outline. I'm going to start with what for me was the beginning, Acorn, then a bit about the ARM, to give you a feel for how computer technology has changed in the 60 years that we've had computers, and then begin to think about where we are now. To finish off with, I'm going to tell you a little bit about my current research at Manchester University. That's the general plan.

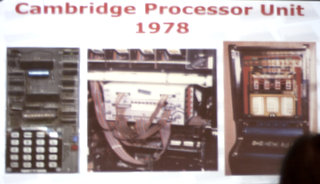

My introduction to computing was at Acorn in Cambridge, when Chris Curry and Hermann Hauser decided they wanted to start a small consultancy business based on the new-fangled microprocessors. Intel introduced the first microprocessor in the early 1970s, and by the late 1970s the microprocessor was beginning to spread across the world and Chris and Hermann thought they could build a business from it. I was involved in the university student society called the 'Cambridge University Processor Group', and Hermann came and found me there and asked me if I could get involved in some of their early work.

The first thing we did with CPU Ltd was develop a microprocessor controller for a fruit machine. There's a picture on the right here. The picture in the middle is the controller that we developed.

The fruit machine is a one-armed bandit, and that industry was just making the transition from electro-mechanical control to electronic control in the late 1970s.

While doing that I was also a little bit involved in the machine on the left. Who recognises the thing on the left?

A: That's an MK14, isn't it?

Yes, it's a Science of Cambridge MK14. I actually prototyped the first of those in my front living room and debugged a little bit of the code, because Chris Curry was then setting up Science of Cambridge with Clive Sinclair and the MK14 was what emerged. That's what computers looked like in the late 1970s, or at least the ones you could buy and play with at home.

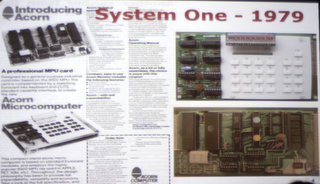

My colleague then, on the CPU side, Sophie Wilson, came in and looked at the MK14 and said "Urgh, I can do better than that", and she went home over the Easter holiday and came back with the quite similar machine shown on the right-hand side here. Instead of being on a single circuit board it was on two circuit boards. You still had to be able to use hexadecimal; the keypad is hexadecimal and the display is a 7-segment display, again showing hex digits. Sophie designed this two-board system, and Chris and Hermann decided they would market it from CPU Ltd, but under the trade name Acorn. So Acorn was introduced first as a trade name of CPU Ltd; of course, later it became the official company name. Where did Acorn come from? There are lots of myths and legends, but from what I remember they wanted a name that sounded a bit like Apple but came earlier in the alphabet, so that was the influence.

Now the Acorn System 1 basically changed CPU's business from being a consultancy to being the designer and supplier of microprocessor systems for fairly widespread use. I said you had to understand hexadecimal arithmetic to use this; in fact you also had to be able to solder, because these things were sold as kits. So the first thing you had to do was assemble the parts on the circuit board, plug it all together, somehow get it working and then start programming in hex.

For those of you who are old enough to remember, this is about the time of the Sinclair ZX80, and Acorn and Sinclair were quiet rivals in this field. Clive Sinclair proudly boasted that you could run a nuclear power station with one of his ZX80 computers. Acorn, of course, had a counter to that, because the BBC used an Acorn System 1 in this set. Who recognises this set? This is the "Blake's 7" set; this is the command console for the spaceship that Blake and his 7 colleagues flew around the galaxy in the 22nd century. There, in the middle of the two hemispheres, you can just about make out an Acorn System 1. The ZX80 was powerful enough to run a twentieth-century nuclear power station; the Acorn System 1 was powerful enough to run a 22nd-century intergalactic cargo ship. The other interesting fact, which only one person would ever have noticed, is that, Sophie told me, when they went up to this to push the buttons, they actually pressed the right buttons to start it running a program. The BBC got that detail right.

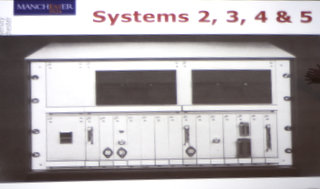

The System 1 was a simple hexadecimal system, but it was based on the standard Eurocard format, and Acorn designed a number of additional cards to go with it and developed the System 1 into the imaginatively named System 2, 3, 4 and 5, and these built up into quite serious computers. Here you can see what a late-1970s floppy disc drive looked like; the bottom one here I recognise as an EPROM blower, but I'm not sure I can recognise the other cards. You could build quite a useful industrial control system, starting from the System 1 and then adding cards into the rack, and Acorn had quite a nice little business selling these systems, but they were quite expensive.

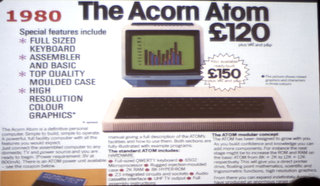

By the time you paid for all this racking and the necessary cards it was quite a lot of money, and Chris Curry had this idea, I suspect inspired by what Apple had been doing in the USA, that if you could cost-engineer this down sufficiently then you'd end up with a much bigger market for your product. That led Chris, with Nick Took, to design the Acorn Atom, which was Acorn's first low-cost machine. Here you see a conventional QWERTY keyboard, so the need to program in hexadecimal has gone away, and it plugs into a standard TV, using it as a colour display. In its earliest form it used audio cassettes for data and program storage, but now you could sell a fully functional computer for £120 (it says here). Again, the Atom was initially sold as a kit, so the first thing you had to do was solder it together, but later on, as this advert suggests, it was sold as a fully-built system. Acorn got into manufacturing the finished product, not just designing it.

There were some interesting stories. One of the machines that got sent back, which had been sold as a kit, came with a long explanatory letter saying that the guy who built it had been extremely careful: he knew that chips were temperature sensitive, so he thought soldering was a bit dangerous and he glued them all in, very carefully, but it still didn't work. At that point I think we decided that kits were not the way to go. The market of people who could be relied upon to solder these things together was smaller than the potential market. So the Atom was the last product that Acorn sold in kit form.

The Atom sold reasonably well and Acorn were keen to develop a successor, and a bunch of us were sketching one. The astute amongst you might be able to recognise me in this picture; yes, I did have hair once upon a time. We were working on this successor design, called the Proton, but not to any particular agenda. The basic idea behind the Proton was that it would continue to use the 6502 as a front-end processor but would be designed to allow second processors to be attached, and the standard configuration would have two processors; that would allow us to incorporate the more powerful 16-bit microprocessors that were coming along at the time. This would have been about 1980.

As everybody knows, during the Proton development Chris Curry got wind that the BBC were looking for a computer to accompany a series of TV programmes that they wanted to develop. Acorn was involved in the bidding for this, and the offering that we put in was effectively the front-end of the Proton. It was designed as a dual-processor system, but that was too expensive for the BBC, so the BBC were persuaded to use just the 6502 front-end of the Proton design. Those of you familiar with the machines will know it kept its second processor capability; in fact it has a dedicated port for connecting a second processor.

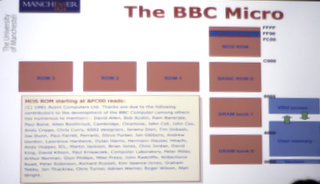

That led to the design of the BBC Micro. I guess most people coming to this show will be reasonably familiar with what's in a BBC Micro. Basically it was a 6502 machine, which meant it had 64KB of address space; try telling today's undergraduates that they only have 64KB to work in, they will not believe it is possible to compute in 64KB. Here, in hexadecimal, is the address space. The bottom half is occupied by DRAM, read-write memory, where the user program and data live. The top half was occupied by the ROMs: the machine operating system ROM that controls the computer and, in the standard machine, the BBC BASIC ROM, which was the language you could program the machine in. If you wanted other languages in addition to BASIC, you could put in additional language ROMs and switch between them.

So that meant that if you wanted to program the machine you were limited to the bottom 32KB; in fact you were limited to a smaller part of that, because some of it was occupied by the display. In the highest-resolution display modes, 20KB of this 32KB was used for the display, so you had 12KB left for your program. If you used a lower-resolution display, less was used for the display and more was left for program space.
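(Ed.) The memory arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not anything shown in the talk. The per-mode screen sizes are the standard BBC Micro figures. Like the 12KB figure in the talk, the sketch ignores the workspace that the operating system (and any filing system) reserves at the bottom of RAM, so real free memory was somewhat lower. The names `SCREEN_BYTES`, `screen_start` and `free_ram` are hypothetical.

```python
# Minimal sketch of the BBC Micro memory arithmetic described in the talk.
# Simplification (as in the talk): OS workspace in low RAM is ignored, so
# "free" here means RAM not taken by the screen.

ADDRESS_SPACE = 1 << 16               # 6502 has a 16-bit address bus: 64KB
RAM_TOP = 0x8000                      # bottom half (&0000-&7FFF) is RAM
ROM_SIZE = ADDRESS_SPACE - RAM_TOP    # top half: ROMs plus memory-mapped I/O

# Screen memory per display mode, in bytes; the screen always ends at &7FFF.
SCREEN_BYTES = {
    0: 20 * 1024, 1: 20 * 1024, 2: 20 * 1024,  # highest-resolution modes
    3: 16 * 1024,
    4: 10 * 1024, 5: 10 * 1024,
    6: 8 * 1024,
    7: 1 * 1024,                                # teletext mode
}


def screen_start(mode: int) -> int:
    """Lowest address used by the screen in the given mode."""
    return RAM_TOP - SCREEN_BYTES[mode]


def free_ram(mode: int) -> int:
    """Bytes of RAM below the screen (OS workspace not subtracted)."""
    return screen_start(mode)


if __name__ == "__main__":
    for mode in sorted(SCREEN_BYTES):
        print(f"MODE {mode}: screen at &{screen_start(mode):04X}, "
              f"{free_ram(mode) // 1024}KB left")
```

Running it prints one line per mode, starting with `MODE 0: screen at &3000, 12KB left`, which matches the talk's figure for the highest-resolution modes.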

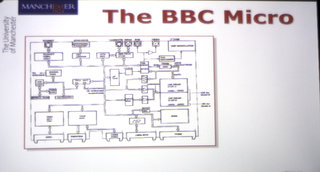

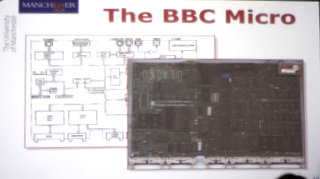

That's the soft view of the BBC Micro. My view was more focused on the hardware, and this is an early schematic of the organisation of the BBC Micro, which materialised as the main circuit board that many of you will have seen. It is quite a complex board, with 102 chips on it. Like most machines of that time, these chips were off-the-shelf parts produced by the semiconductor industry, and the job of designing machines was the job of selecting parts which you could buy and then assembling them onto a circuit board to build the system that you wanted to build. In the BBC Micro, 100 of the chips were standard off-the-shelf chips, but 2 of them (I'll get this wrong if I'm not careful), that's the video processor and that's the serial processor, were designed specifically for this machine. They look exactly the same. They used a customisable technology from Ferranti in North Manchester, which they called Uncommitted Logic Arrays: you got a bunch of logic and you could work out how to configure it yourself. One of these handled the fast end of the BBC video channel and the other the serial interface and cassette storage system. That was my introduction to designing my own chips; I had quite a large hand in specifying what went on these chips. The design tools came from the Cambridge Computer Lab and the chips were manufactured, as I said, in Oldham. That got me into chip design, and basically I've been in chip design ever since. This is what started me on the next 30 years of my career, designing microchips in various shapes and forms.

The Beeb went together with appropriate marketing material. The BBC logo is quite prominent here, and that was quite important at the time because the UK had a lot of companies building small home computers. It was quite easy and cheap to set up a company, buy these parts off the shelf, assemble a circuit board, put it in a plastic box and sell it. A lot of companies were doing this, and for the enthusiast and hobbyist this meant there was a rich choice of products, but for people who weren't enthusiasts or hobbyists it was very worrying, because you'd not heard of any of these companies before; they might be fly-by-night, and you might buy their computer today and they go bust tomorrow. For Acorn, getting the BBC name, the BBC brand, on our computer gave the buying public, I think, much greater confidence in the viability of the machine and the fact that it would be around for a bit. Whether this confidence was well placed or not is an interesting point, as Acorn was a small, fragile company just like all the others, but the BBC logo gave the buying public confidence and therefore the machine took off.

I also think that the machine was well put together and had a good spec. Some of that was the result of creative tension between the Acorn designers and the BBC team, who had some clear ideas about what they wanted the machine to do, and I think that tension led to a very open machine architecture that was very easy to extend. I like to think it was a good machine, although if you get me in a quiet moment after the talk I'll tell you some of the dirty secrets about the things in the hardware that really worried me, and why I was extremely relieved and more than slightly surprised when, 1.5 million BBC Micros later, they were still, on the whole, working. There were bits of the design that were extremely close to the edge. The most worrying one was the processor: its 8-bit data bus was driving about 4 times its specified load, so it was seriously overloaded.

It went quite well, and lots of things were designed to add to it. The original picture shows a couple of boxes on the left-hand side; those boxes hosted things such as the PRESTEL software receiver, so you could download software from the BBC airwaves. Remember, in 1982 there was no Internet, or at least no Internet that most people could access, so the BBC spent quite a lot of time thinking about how to distribute software. The second processor was in a similar box. Those in the know will realise that neither of those boxes can be the second processor, because it had to be connected at the other end of the machine, next to the box with the disk drive, to make the second processor connection.

The machine was extensible, with a complicated specification drawn up in league with the BBC, but perhaps most important was the BBC BASIC language. It was written entirely by Sophie Wilson, who had written the BASIC for the Atom before this, but the specification was something the BBC had very strong views about. That was one of the points where the BBC had quite a lot of input into the ultimate performance of the machine, and it was BBC BASIC, I think, as much as anything, that made the machine so readily usable by such a wide audience.

There were ancillary components: there is a BBC-branded audio cassette deck. I don't know how many of you have ever seriously tried to use a computer with an audio cassette to store the programs on. I see some people are confessing. It is horrendous; I don't recommend it.

There is the Telesoftware system: using a few spare lines in the CEEFAX signal you could download code. And then there is the 6502 second processor, which is the box on the right-hand side, as the connector is just underneath there.

What else have we got in here? The TV programme series was the reason for the machine in the first place. There were things like hardware music synthesizers to go with the BBC Micro, such as the Music 500. It was at a time when the general feeling was that the way to do music with a computer was to understand the dynamics of the notes of an instrument and synthesize those dynamics from first principles. Of course today this is not done very much; most of the time it's done by sampling, where you simply record samples and replay them, but in those days the sounds were built from first principles. For word processing, the Acorn offering was VIEW.

Magazines came out in great profusion. Acorn User was one of the ones that supported the machine; slightly more specialist ones were BBC Micro User, and then of course there were the games.

Anyone who knows the history of the BBC Micro will know about the role it played in the development of the game Elite by David Braben and the role that game played in establishing the multi-zillion pound UK computer games industry that we have today.

The Beeb wasn't the only machine; in fact for many people the Spectrum was the more important games machine, but I think the developers preferred developing on the Beeb, as it was a nicer development platform, and Elite was hugely impressive.

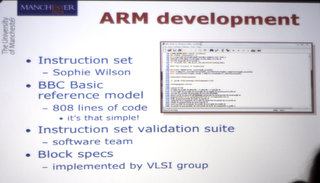

That was the BBC Micro. Directly related to that was the emergence of the ARM processor. The BBC Micro was selling very nicely in 1982-83, and within Acorn we were again thinking about what the next machine was going to look like. With the Beeb we built lots of second processors for the 16032, the 68000 and other 16-bit processors around at the time, but we didn't like any of them. To be fair, we were getting a bit cocky; most of the things we tried to do didn't work. If we don't like the processors we can buy, maybe we should think about designing our own. This was a very odd thing for a company the size of Acorn to do at that time. The big semiconductor companies had teams of hundreds of people building microprocessors; we had quite close connections with them, and it seemed to take them many iterations. Then we heard about this idea from academics in the US, the Reduced Instruction Set Computer. It was basically saying that the way all these big companies were designing microprocessors was not the right way to design a single-chip computer, and that they should be aiming for something much simpler. They demonstrated this, particularly at Berkeley, by having a postgraduate class design a microprocessor in a year that was competitive with the best industrial offerings.

At Acorn we picked up on that idea and set about developing the ARM. ARM stands for Acorn RISC Machine; Reduced Instruction Set Computer is ARM's middle name. It's a very simple processor. Sophie Wilson (again) drafted the instruction set. I wrote the BBC BASIC reference model; the entire processor was modelled in BBC BASIC. I thought the model was lost until 2-3 years ago, when I found it on a floppy disc in my garage. It took me quite a long time to read this floppy disc, because it was a BBC floppy disc and I don't generally have BBCs lying around operational, but on there was the original ARM reference model: 808 lines of code covering the processor and the test environment. When you turn it into today's high-technology design systems in Verilog, it comes to 10,000 lines of code.


I thought, having found this reference model, this is quite a nice piece of historical BBC BASIC, I'll put it up on the Internet, I'll just check with my friends at ARM that they're happy for me to do this and I immediately got back the reply "No, this is commercially confidential", so I'm afraid it's not in the public domain at the moment.

We had a bunch of software people, 2-3 of them, who wrote validation suites for the software. Then from the reference model we wrote specifications of the various bits of the processor and handed them to the team of 3 VLSI designers who put the silicon design together. All the time we were doing this we thought this RISC idea was so obviously a good idea, and the academics had proven it, that the industry would take it up and we'd get trampled underfoot: we could put half a dozen people on this job and the semiconductor companies could put 200-300 on it, so we'd get wiped out. But, we thought, in the process of trying this we'd learn something about processor design, and when we went to buy our next processor we'd know a lot more about what we were doing.

Nobody was more surprised than us when, 18 months later, we had a working microprocessor in our hands and the industry simply hadn't followed this idea through at all. The industry in the mid-80's had written off the idea of the Reduced Instruction Set Computer as an academic irrelevance, not commercially relevant.

So we ended up with a working processor. There have been lots of retrospective analyses of how Acorn, this tiny British company with no microprocessor track record, managed to pull a microprocessor out of the hat when the big companies took hundreds of man-years to produce things that were less effective.

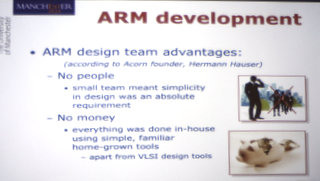

Hermann (Hauser), of course, has rewritten history appropriately. Hermann says we had two clear advantages over the competition: the first, 'No people', and the second, 'No money'.

These advantages are a bit more real than they sound. We had such a small team designing this that keeping the design simple was imperative; otherwise we simply wouldn't have got to the end of it, we'd never have completed it and would have ended up with nothing at all. The very early ARM designs in particular had simplicity imposed on them, and impressed on them, with great force. The second thing, no money, meant everything was home-grown. As I said, I built the reference model in BBC BASIC, which was a home-grown tool, and for all the other chips in the chip-set that were required to build the computer we built an event-driven simulator in BBC BASIC. The whole thing was done in BASIC. The only exception was that we bought in some chip design tools from VLSI Technology. This wasn't specifically to do with the ARM; it was a kind of strategic investment. We'd seen that the ULAs on the BBC Micro were adding a lot of value, and we wanted to get better at including chips in our computer designs.


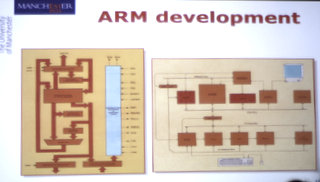

From all this the ARM emerged. The picture on the left is the internal micro-architecture of the ARM, which I was responsible for, and I can outline the design methodology very quickly. I sketched something that looked like that on a piece of paper, went to a photocopier and made 50 copies of that piece of paper, and then started going through Sophie's instruction set, colouring it in to show what was doing what in which cycle of each instruction, until something didn't work very well. When something didn't work very well, I thought about it a bit, rubbed out some of the drawings, changed it, went back to the photocopier and started again. I think that was how you designed micro-architectures then.


It was always designed as one of four chips to build a complete computer. The BBC Micro has 102 chips; what we wanted was a successor with maybe 20 or 30 chips. We had the ARM processor, a memory controller, a video controller that drove the display directly, and an I/O controller. Those were the four chips that were designed to go together. You then needed some memory parts and a few peripherals, and you'd have yourself a computer.

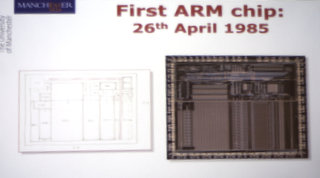

The first silicon arrived on 26 April 1985. The picture on the left is my rough hand sketch of how the thing should go on the chip; the picture on the right is how it actually did go on the chip. They're quite similar, but not identical; you'd probably have to look for quite a long time to spot the difference.


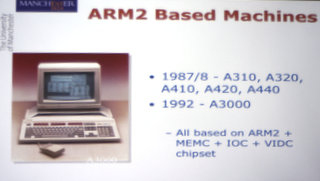

We had a working RISC processor in our hands, 25000 transistors, that outperformed the Motorola 68020, which had 250000. Now we had to put these into some machines. There was a series of machines built using the second version of the ARM processor, the ARM2: as we were making the other three chips, we re-engineered the processor onto a slightly smaller process technology. The earliest machines were the A310 Archimedes, and the series went through to the early 1990's with the A3000. These were all based on the complete four-chip set.


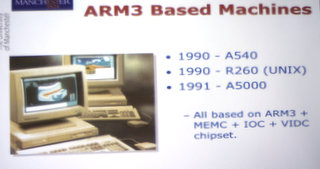

Toward the end of the 80's we put cache memory, little bits of local fast memory, on the processor. That made it go a lot faster, and this was the ARM3, which came out in another series of machines with the same three support chips but with 2-5 times the performance (depending on how you configured it).


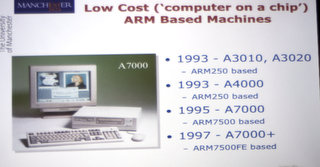

Later in the 90's the four chips were put together onto a single chip. This was early system-on-chip development and led to a series of ARM machines starting from the A3000 series (Ed. The Acorn A3010, A3020, A4000) and ending up in 1997 with something that had hardware floating point on it (Ed. The Acorn A7000+ with the Cirrus Logic ARM7500FE).


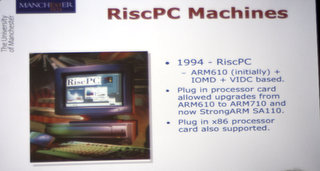

Finally, at the end of the Acorn story in the 90's, the Risc PC came out with various microprocessor options and configurations, and you could even plug in an x86 card if you really had no taste at all.


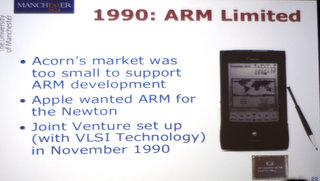

As Acorn continued producing this series of machines, I left Acorn to go to Manchester in 1990, and a few months later that year the ARM activity was spun out of Acorn into a joint venture with Apple, called ARM Ltd. The rationale here was that Acorn's market for these processors was effectively defined by the UK government education budget for computers, and that market was too small to sustain the processor development at the level required to keep it competitive. Acorn was looking for some way to offload the cost of this development; in fact, during my last two years at Acorn I spent some time looking at business plans to move the ARM activity out of the company. I couldn't make any of these work; the numbers just didn't pan out.

Then in 1990 Apple approached Acorn wanting to use the ARM in their Newton product, and what they found was that they were pushing on an open door. They wanted ARM outside Acorn, because they saw Acorn as a competitor, so the joint venture was set up to develop the ARM technology, initially for its customers Acorn and Apple. The ARM went into the Apple Newton. Today we don't remember the Newton as a success, because it was about 10 years ahead of its time, and it was not viewed as a particular success by Apple, but it was the thing that made ARM. Then, as now, the Apple name was an extremely strong brand, and the fact that ARM had the Apple business opened up a whole lot of other doors. By the time it was becoming clear that the Newton was not going to proceed, it didn't matter any more. In the early days of ARM the Apple link was crucial.


ARM was very nicely timed. It was set up in 1990, just as systems-on-chip were arriving: the idea that on a chip you put not just the processor but all the other bits and pieces you need to make a product. There are cost benefits in doing this, but it's only possible when the technology gives you enough transistors. ARM had a big advantage in this game because it was small: it used fewer transistors, so it left more silicon resource for all the other stuff you needed to build a product. ARM was very well timed for system-on-chip, and the most important system-on-chip business was the mobile phone handset. ARM really got into the big time when they got the Nokia contract in the mid 1990's, when Nokia was the world's biggest handset manufacturer.

The key to ARM's economic survival was, first, working out how to escape the iron grip of their two big owners, Apple and Acorn, and secondly how to make a business model that kept them financially solvent; this was the thing that we couldn't work out how to do in the late 80's. Robin Saxby's contribution here was to introduce a novel business model that had a 'join the club' fee. If you wanted to use an ARM in your product, you would pay royalties downstream according to the number you used, but you'd also pay a big slug of money up front just for the right to use it. Of course the royalties that come downstream are terrible for cash-flow: it takes a very long time until they start arriving at all, then they come in a trickle, and it's many years before they're a significant sum of revenue.

The 'join the club' fee is a big slug of money up front, which is wonderful for cash-flow, and that's the thing that made the ARM business work. By 'big slug of money' I mean something of the order of millions of dollars, which is what you would pay to join the ARM club.


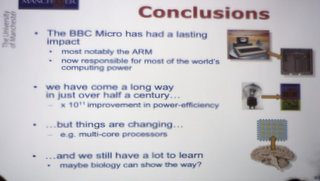

From that start ARM has grown to dominate the global business in embedded systems and systems-on-chip, with something like 98% of all mobile phone handsets ARM powered. I believe that today 75% of all clients connected to the internet are ARM powered, so it's not just in the mobile market, it's in many other places.

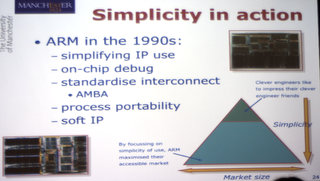

There are lots of ways of looking at ARM's success in the 1990s. Remember this is after I'd left Acorn; I was in Manchester at this time, and this is what the guys who stayed on in Cambridge achieved. They didn't do the obvious thing: they didn't spend a lot of time working out how to make the processor go faster, and in fact they put surprisingly little resource into expanding the architecture upwards. What they seemed to understand very well was that they had to make it easier to use. The picture on the bottom-right of this slide explains one view of their success. If you have a company with a bunch of clever engineers, it's very tempting for them to design and develop things to impress their clever engineer friends, but the number of clever engineers out there who can then buy your product is quite small. The easier you make it to use, so that your customers don't need clever engineers and can use regular, normal engineers, the bigger the market gets. So the simpler you make it, the broader the market gets.

This is the thing I think ARM did exactly right in the 1990s: they put lots of work into simplifying what it took to use their Intellectual Property, their IP. They put a lot of effort into working out how to make programs work on it. All software engineers know they have to debug their programs, but once the processor is buried in a mobile phone, how do you debug it then? ARM put a lot of work into that. They put a lot of work into standardising the interconnects, so that assembling the components you need on a chip was easy, and into making the design portable between different processes, so that different manufacturers could make it.


At the end of the 90's the story was moving away from the idea of Hard IP, physical processors, into Soft IP, where the processor is described by a language that the customer can synthesize on their target process. I think this is the thing ARM did that was unusual, they had an unusual business model and they had an unusual approach to getting their product used, which was to make it as easy as possible to use.

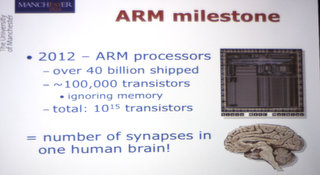

20 years later, the ARM has got itself established; it's at the leading edge of global computing. The last number I heard for total ARM processors shipped to date was 44 billion, 7 for every human being on the planet. I want you to check around your pockets to make sure you've got your 7 ARM processors somewhere to hand. I think there are at least 6 ARM processors in one of those (points at phone), so they can rack up fairly quickly.


One of the impressive statistics for ARM: in 2012 more ARM processors were shipped in that one year than Intel has shipped in its entire 45-year history, so they're now outselling Intel by a significant margin. Not just if you count processors, but value as well if you look at the amount delivered.

Here's a random statistic that I consider to be in the Douglas Adams style of random statistics. Take all that vast number of ARM processors, each of which uses of the order of 100000 transistors excluding memory, and work out the total number of transistors in all the ARM processors that have ever shipped: you get a very big number, of the order of 10^15, a million billion transistors. That just happens to be equivalent to the number of synapses, the connections between neurons, that each of us has inside our head. You have as many connections inside your head as all the transistors in that vast number of ARM processors, and the synapse is a much more powerful component than a transistor. Your brain is more capable than all the ARM processors put together, so think about that the next time you're down the pub.
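(Ed.) The statistic is a single multiplication. A minimal sketch, taking the 44 billion shipped processors quoted earlier in the talk and the rough figure of 100000 transistors per processor; both are order-of-magnitude estimates, not precise counts. The product lands at about 4.4 × 10^15, i.e. a few million billion.

```python
import math

# Figures quoted in the talk (order-of-magnitude estimates only)
arm_processors_shipped = 44e9      # total ARM processors shipped to date
transistors_per_processor = 1e5    # rough size of one ARM core, excluding memory

total_transistors = arm_processors_shipped * transistors_per_processor
order_of_magnitude = math.floor(math.log10(total_transistors))

print(f"total transistors ~ {total_transistors:.1e}")
print(f"order of magnitude: 10^{order_of_magnitude}")
```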

I want to put this progress in context. 60 years: why 60 years? I'm from Manchester and therefore can't get through this sort of talk without talking about the Manchester Baby, which was the first computer in the world to run a program stored in its own electronic memory, the world's first stored-program computer, and in some sense the forerunner of all today's computers.


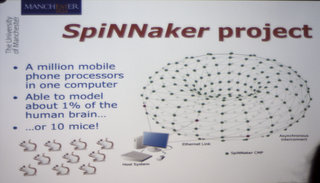

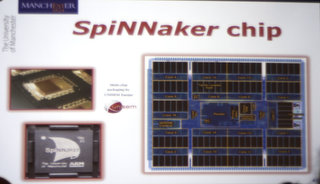

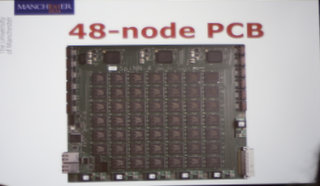

A more recent Manchester computer is the one my group has designed for the SpiNNaker system, which I hope to come back to at the end of the talk, but for these purposes just think of it as a typical energy-efficient processor that you might find in your mobile phone.


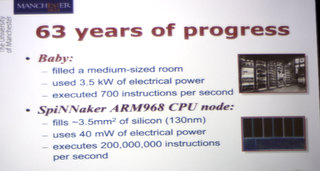

There are 63 years between those two machines. Baby was big: it was 7 feet tall, about the width of this screen, lined up in 7 post-office racks, and it used 3.5kW of power, with which it could execute 700 instructions per second: 700 adds, data moves and so on.


The SpiNNaker processor, similar to your mobile phone, is a few square millimetres, tiny, it uses 40mW of electrical power and with that will execute some 200 million instructions per second.

One interesting thing to note is that the word 'instruction' has not changed in 63 years. The Baby was a 32-bit binary machine, the ARM that we use on SpiNNaker is a 32-bit binary machine, and the instructions are pretty much the same as they were right at the beginning. The ARM has a slightly wider choice of instructions, but fundamentally they're the same.

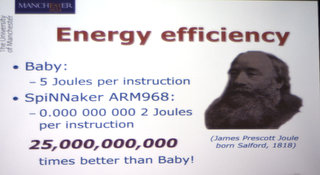

So we can compare those two machines in terms of energy efficiency. If you do the sums on the numbers from the previous slide, you'll find that Baby uses 5 Joules per instruction and SpiNNaker uses something with lots of zeroes after the decimal point, and the ratio of those two numbers is something like 25 billion. So computers have improved in their energy efficiency by a factor of 25 billion over half a century, and this is formidable progress. It's such a big number it's hard to get your head around it. The one example that I think is useful is the UK's road transport fleet: all the cars, buses and lorries in the country use about 50 billion litres of fuel a year. If the car industry had improved fuel efficiency at the same rate as computers have improved their energy efficiency, we could run the entire country off about two litres of fuel, instead of requiring hundreds of supertankers and pipelines around the world. I know there are fundamental reasons why improving the energy efficiency of vehicles is harder, but I'm still surprised that they've only started working on this seriously quite recently. Anyway, it gives you a feel for the scale of the number.
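(Ed.) The sums referred to above can be reproduced from the figures quoted for the two machines: energy per instruction is power (joules per second) divided by instruction rate (instructions per second). A minimal sketch using only the talk's numbers (3.5kW at 700 instructions/s for Baby, 40mW at about 200 million instructions/s for SpiNNaker, about 50 billion litres of fuel a year for the UK road fleet); the variable names are illustrative.

```python
# Figures quoted in the talk for the two machines
baby_power_w = 3.5e3          # Manchester Baby: 3.5 kW
baby_ips = 700                # instructions per second
spinnaker_power_w = 40e-3     # one SpiNNaker processor: 40 mW
spinnaker_ips = 200e6         # about 200 million instructions per second

def joules_per_instruction(power_w: float, ips: float) -> float:
    """Energy per instruction: power (J/s) divided by instruction rate (1/s)."""
    return power_w / ips

baby_epi = joules_per_instruction(baby_power_w, baby_ips)
spinnaker_epi = joules_per_instruction(spinnaker_power_w, spinnaker_ips)
improvement = baby_epi / spinnaker_epi   # factor gained in energy efficiency

# Road-fleet analogy: annual fuel use divided by the same improvement factor
uk_fuel_litres_per_year = 50e9
equivalent_litres = uk_fuel_litres_per_year / improvement

print(f"Baby: {baby_epi:.1f} J/instruction")
print(f"SpiNNaker: {spinnaker_epi:.1e} J/instruction")
print(f"improvement: {improvement:.2e}")
print(f"fleet fuel at the same improvement: {equivalent_litres:.1f} litres")
```

The result is 5 J against 2 × 10^-10 J per instruction, a ratio of 2.5 × 10^10 (25 billion), and 50 billion litres divided by that factor gives the two litres quoted.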


The unit of energy is the Joule, and it's time for a Manchester link: the bloke with the impressive beard is James Prescott Joule, born in Salford, which, since I think I'm far enough away from home, I can say is part of Manchester. That's a dangerous statement; there are two different football teams involved, I think.

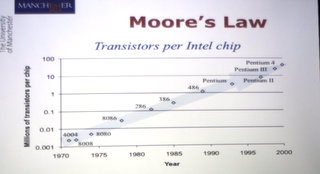

We know what's driven all this: Moore's Law. Gordon Moore observed that the number of transistors that could be put on a chip was doubling every 18 months to 2 years. That observation was made back in 1965, and that rule has effectively guided the industry ever since. If you double every two years, then in 20 years you have 10 doublings, which is a factor of 1000; in 40 years you have 20 doublings, which is a factor of 1000000.
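(Ed.) The doubling arithmetic can be checked in a few lines. A minimal sketch of compound doubling, growth = 2^(years / doubling period), evaluated at both ends of the "18 months to 2 years" range; the function name is illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Growth after `years` if the quantity doubles every `doubling_period_years`."""
    doublings = years / doubling_period_years
    return 2 ** doublings

for years in (20, 40):
    for period in (2.0, 1.5):   # the "18 months to 2 years" range quoted above
        print(f"{years} years, doubling every {period} years: "
              f"x{growth_factor(years, period):,.0f}")
```

At a two-year period this gives 2^10 = 1024 (roughly 1000) after 20 years and 2^20 = 1048576 (roughly a million) after 40. At 18 months the 20-year factor is already above 10,000, which is why the choice of period in the rule of thumb matters so much over decades.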


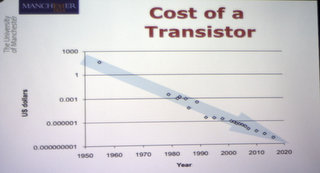

Things grow at a formidable rate, and it's not just the number of transistors but also the cost. Back in the 1950's the first transistors cost about 500 dollars each. This is a logarithmic graph, so every time this arrow crosses one of the horizontal lines it's a factor of 1000 reduction in the cost of a transistor, and by today we have had a reduction of more than three factors of 1000. That delivered the kind of technology that we now take for granted.


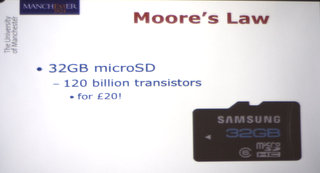

Today you can buy a 32GB microSD card, which contains 120 billion transistors, for about £20. This means I can now carry my entire CD collection on my mobile phone, for no good reason other than that I can. I do remember, in the 1980s, having debates with my colleagues about whether it would be possible to put a single track of music into solid-state memory. Back in the 80s those of us in the business thought it would be possible, but it was worth an argument back then. And now you can get these things for next to nothing.
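
As a rough cross-check of the 120 billion figure, here is a sketch assuming one transistor per flash memory cell and two bits stored per cell (multi-level cell flash). Both are assumptions, not details from the talk, and real cards also contain controller logic and spare cells.

```python
CAPACITY_BYTES = 32 * 10**9  # 32 GB, decimal gigabytes as card makers quote them
BITS_PER_CELL = 2            # assumption: MLC flash stores 2 bits per cell
PRICE_GBP = 20.0             # price quoted in the talk
TRANSISTORS = 120 * 10**9    # transistor count quoted in the talk

cells = CAPACITY_BYTES * 8 // BITS_PER_CELL
print(f"memory cells at {BITS_PER_CELL} bits/cell: {cells / 1e9:.0f} billion")
print(f"price per transistor: {PRICE_GBP / TRANSISTORS * 100:.1e} pence")
```

Under these assumptions the card holds 128 billion cells, in the same ballpark as the 120 billion quoted, at well under a millionth of a penny per transistor.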


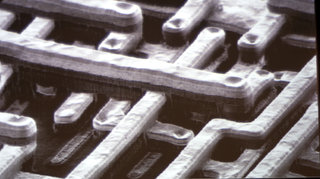

Transistors are the solid-state devices that control the flow of electrical current. Slightly more graphically, if you look close up at a microchip you see a structure that looks a bit like this. Most of what you're seeing in this picture is metal; the shiny bits are metal layers. If you look carefully you can see 3 different metal layers stacked here; today's chips have 8 to 12 metal layers. There are a couple of transistors right there at the bottom, towards the left. The transistors are in the silicon, with the metal layers stacked on top. If you magnified the chip in your mobile phone to the same scale, the whole chip would be 2-3 square miles at this level of detail. In 10 years' time the transistors will be smaller, and it would be about 100 square miles at this level of detail. If you like analogies, the job of designing the wiring of one of today's microchips is roughly equivalent to designing the road network of the planet from scratch, including footpaths. That's how many wires there are in today's microchips. Yet we can buy them for £20 and throw them away when we get tired of the colour.
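
The jump from 2-3 square miles to about 100 square miles is consistent with Moore's Law. A sketch, assuming the die stays the same size, the transistor count doubles every two years, and each transistor is always magnified to the same apparent size, so the magnified area simply tracks the transistor count; `magnified_area` is a hypothetical helper.

```python
def magnified_area(today_sq_miles: float, years: float, doubling_years: float = 2.0) -> float:
    """Magnified chip area if each transistor is always drawn at the same size.

    Assumes a constant die size, so the magnified area grows with transistor count.
    """
    return today_sq_miles * 2 ** (years / doubling_years)

for today in (2.0, 3.0):
    print(f"{today:.0f} sq miles today -> {magnified_area(today, 10):.0f} sq miles in 10 years")
```

Five doublings in ten years give a factor of 32, so 2-3 square miles becomes roughly 64-96 square miles, close to the 100 square mile figure.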


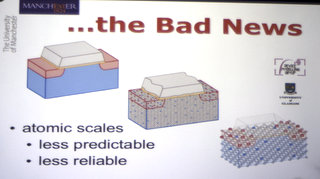

The bad news is this isn't going to go on for ever - we are approaching atomic scales. You get about

5 silicon atoms to the nanometre and so Intel's latest 20nm transistors are about 100 across

and as you approach atomic scales technology becomes less predictable and less reliable. So

the future faces the challenges of design with unreliable components. There are also problems

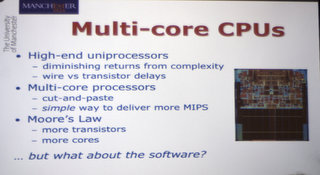

at the architecture level, anyone who buys a computer will know you can no longer get faster clock rates

on you computers, instead you have computers that have multiple processors on, many core processors.


So an Intel system is a dual-core something or other, and even mobile phones are dual-core or quad-core. This is because it's the only way we know to put more computing power into things. The main trend of getting ever-faster single processors is simply failing, for power reasons. Moore's Law still delivers more transistors, but now that translates into more cores, which is all well and good except that we still have no real idea how to program these things. The problem of programming parallel computer systems is one of the holy grails of computer science; people have been banging their heads against it for 50 years and there is still no general solution. So we're in an interesting position: the technology is perhaps unreliable, and the only way forward is an architecture we don't know how to use.
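
Amdahl's law, which the talk doesn't mention, is one standard way to see why extra cores don't automatically translate into extra speed: any part of a program that stays serial caps the speedup. A minimal sketch, assuming a hypothetical program that is 90% parallelisable; it models the ceiling on speedup, not how hard the parallel part is to write.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: best-case speedup when only part of a program runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

for cores in (2, 4, 16, 1024):
    print(f"{cores:>5} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

Even with 1024 cores the speedup stays below 10x, because the 10% serial part dominates once the parallel part has been spread thinly enough.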


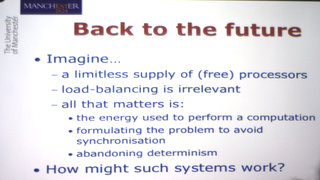

So I want to give you a quick glimpse into some of the research we're doing on this. In this research we're imagining a future where we have a limitless supply of free processors. This isn't particularly silly, as the ARM processor in your phone now costs a few cents and the price is still falling. Worrying about keeping all of these processors busy, what's called load balancing, becomes irrelevant when they're this cheap, so what I argue is that we should be worrying about something else. Rather than making good use of expensive processors, what we should be worrying about primarily is the energy used to perform the computation. Energy is a primary issue in computers, all the way from mobile phones to ???? If you buy a PC and work it fairly hard, you'll find that in 3 years, when you want to replace it, you will have spent more on feeding it electricity than you spent on the hardware; PCs now cost more to run than to buy. This requires us to rethink the problem, and that's the focus of our current research, because we think there's one example of a system that displays the properties we're looking for, and that is the thing inside each of our heads. The brain is a highly parallel system made of unreliable components, but fundamentally we don't know how it works.
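
The running-cost claim comes down to simple arithmetic: average power × hours × price per unit of energy. A sketch with entirely hypothetical inputs (150 W average draw, running continuously, £0.15 per kWh); none of these numbers come from the talk, and a machine that isn't worked hard will cost much less to run.

```python
HOURS_PER_YEAR = 24 * 365

def running_cost_gbp(avg_watts: float, years: float, price_per_kwh_gbp: float,
                     duty_cycle: float = 1.0) -> float:
    """Electricity cost of running a machine at a given average power draw."""
    kwh = avg_watts / 1000 * HOURS_PER_YEAR * years * duty_cycle
    return kwh * price_per_kwh_gbp

# Hypothetical figures: a PC worked hard around the clock, drawing 150 W on average,
# at £0.15 per kWh, over the 3-year replacement cycle mentioned in the talk.
cost = running_cost_gbp(avg_watts=150, years=3, price_per_kwh_gbp=0.15)
print(f"3-year electricity bill: about £{cost:.0f}")
```

Whether the bill exceeds the purchase price depends entirely on those inputs; with these assumptions it comes to roughly £590 over three years.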


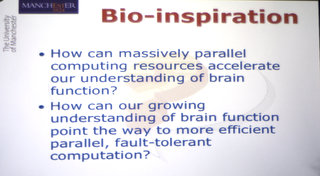

So this research is trying to address two big questions. Can we use the computing power we now have available to accelerate our understanding of how the brain works? And can we use that understanding to work out how to build better computers?


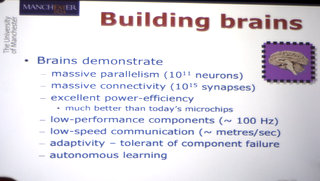

I've spent most of my career designing chips and building conventional processors, so why am I now talking about brains? Because I think they address some of the issues that are facing computer design. They are massively parallel: you have 100 billion neurons and a million billion connections. They are much more power-efficient than the best electronics we know how to build. There are various ways of measuring that, but it's clear that biology still has a big lead over electronics in energy efficiency. They're made of slow stuff: there's nothing inside your head that works on time scales much shorter than a millisecond, whereas in this computer we measure time delays in picoseconds or tens of picoseconds. So there are huge differences of scale; biology is slow and the signals move slowly. And the brain is tolerant of component failure. As you sit here listening to me, you'll lose about one neuron per second. That's the average neuron loss rate through adult life, but don't panic: over the useful life of your brain that's only 1-2% component loss, and your brain can accommodate that quite easily. Whereas if this computer, with several million transistors, lost one, it would be critical to its function: the processor would come to an untimely end.
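
The 1-2% figure follows from the loss rate and the neuron count quoted above. A sketch, assuming a constant loss rate and an adult lifetime of 50-60 years (an assumption, not a figure from the talk); it also puts a number on the millisecond-versus-picosecond gap.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
NEURONS = 100e9        # "100 billion neurons", as quoted in the talk
LOSS_PER_SECOND = 1.0  # "about 1 neuron per second"

def fraction_lost(adult_years: float) -> float:
    """Fraction of neurons lost at a constant rate over `adult_years`."""
    return LOSS_PER_SECOND * SECONDS_PER_YEAR * adult_years / NEURONS

for years in (50, 60):
    print(f"{years} adult years: {fraction_lost(years):.1%} of neurons lost")

# Timescale gap: ~1 ms biological events vs ~10 ps electronic delays.
print(f"speed ratio: {1e-3 / 10e-12:.0e}")
```

Both lifetimes land between 1% and 2%, and the biological and electronic timescales differ by a factor of about 100 million.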


We don't know how to build computers that have this ability to tolerate component failure.

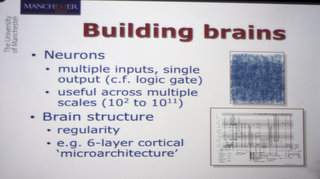

Brains are made of neurons. These are a bit like logic gates: they have multiple inputs and a single output, and there's structure in there. Your brain is pretty similar at the back, where you do image processing, and at the front, where you do high-level language and high-level thinking. These processes all run on similar substrates, which suggests to me that they use similar algorithms, but we don't know what that means.
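
The logic-gate analogy can be made concrete with the textbook McCulloch-Pitts threshold unit: many inputs, one output. This is a deliberately crude abstraction chosen for illustration; real neurons communicate with timed spikes, and this sketch is not a description of how the brain, or any particular neural model, works. `threshold_neuron` is a hypothetical name.

```python
from typing import Sequence

def threshold_neuron(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """McCulloch-Pitts style unit: fire (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# With equal weights, the threshold alone decides whether the unit acts like AND or OR.
for a in (0, 1):
    for b in (0, 1):
        and_out = threshold_neuron([a, b], [1.0, 1.0], threshold=2.0)
        or_out = threshold_neuron([a, b], [1.0, 1.0], threshold=1.0)
        print(a, b, "AND:", and_out, "OR:", or_out)
```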
